Ever wondered how your computer’s brain, the CPU, juggles so many tasks at once? It all comes down to a highly organized process. The component that holds an instruction while the control unit works out what it means, just before the Arithmetic Logic Unit (ALU) carries out the operation, is the Instruction Register (IR). This small but mighty part helps your processor execute commands smoothly and efficiently by keeping the instruction currently being handled in one fixed place. Understanding its role is key to grasping how computers work.

What is an Instruction Register (IR)?

The Instruction Register, often abbreviated as IR, is a special high-speed storage location within the processor itself. Think of it as the top item on the CPU’s to-do list: the one it is working on right now. Its primary job is to hold the single instruction that is currently being decoded or executed.

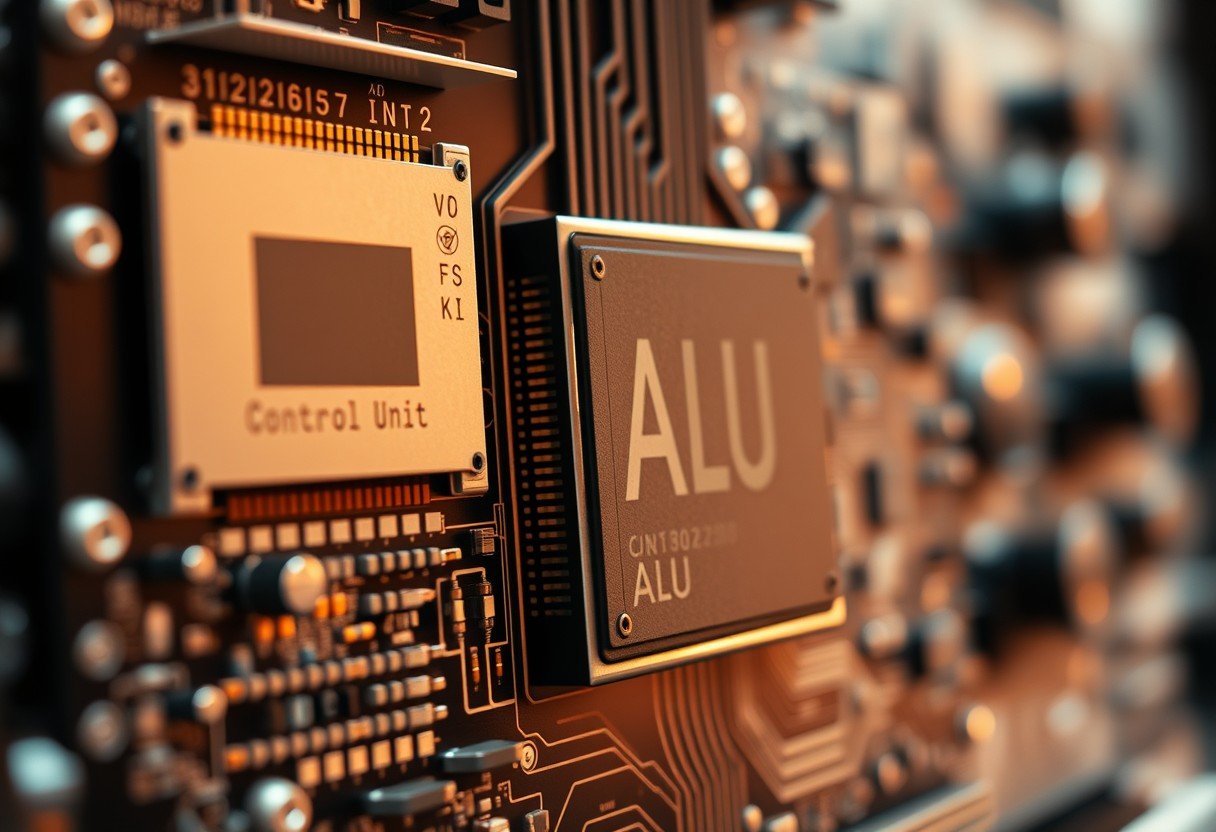

When the processor fetches an instruction from memory, it is loaded into the IR, often by way of an intermediate register such as the Memory Data Register. The control unit then reads the instruction from the IR to figure out what operation needs to be performed. This could be anything from adding two numbers to moving data from one place to another.
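To make "reading the instruction from the IR" concrete, here is a minimal Python sketch of how a control unit might split an instruction word into fields. It assumes a hypothetical 16-bit format in which the top 4 bits are the opcode and the low 12 bits are a memory address; the `OPCODES` table and the `decode` function are invented for illustration. Real instruction sets such as x86 or ARM use very different encodings.

```python
# Hypothetical 16-bit instruction format: [opcode: 4 bits][address: 12 bits]
# The opcode numbers below are made up for this example.
OPCODES = {0x1: "LOAD", 0x2: "ADD", 0x3: "STORE", 0x4: "JUMP", 0xF: "HALT"}


def decode(ir: int) -> tuple[str, int]:
    """Split the word held in the Instruction Register into its fields."""
    opcode = (ir >> 12) & 0xF   # top 4 bits select the operation
    address = ir & 0x0FFF       # low 12 bits name a memory location
    return OPCODES.get(opcode, "UNKNOWN"), address


ir = 0x2005                     # the instruction currently sitting in the IR
print(decode(ir))               # ('ADD', 5)
```

In hardware, this "decoding" is done by wiring: particular bits of the IR feed directly into the control logic. The shifts and masks here only imitate that.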

The Instruction Register is designed to hold only one instruction at a time. This might sound inefficient, but it is a critical part of a very fast and organized process known as the instruction cycle. By isolating the current instruction, the CPU can focus on executing it correctly before moving on to the next one in the sequence.

How the Fetch-Decode-Execute Cycle Works

The seamless operation of your computer relies on a fundamental process called the fetch-decode-execute cycle. This three-step loop is the core of what a CPU does, repeated billions of times per second to run your programs. The Instruction Register is central to this cycle.

The process begins with the fetch stage, where the CPU retrieves the next instruction from the computer’s memory. The address of this instruction is stored in another register called the Program Counter (PC). Once fetched, the instruction is placed into the Instruction Register (IR), and the PC is advanced to point to the instruction that follows.

Next comes the decode stage. The Control Unit (CU) examines the instruction held in the IR. It interprets the binary code to understand what action is required and which parts of the CPU are needed to carry it out. This is like a translator converting a command into a set of signals the hardware can understand. The final stage is execution, in which the operation is actually carried out.

- Fetch: The processor retrieves an instruction from memory and stores it in the Instruction Register.

- Decode: The Control Unit interprets the instruction in the IR to determine the required action.

- Execute: The instruction is carried out. This often involves the Arithmetic Logic Unit (ALU) performing a calculation or a logical operation.

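Once the instruction is executed, the cycle starts all over again with the instruction the Program Counter now points to. Usually that is simply the next one in memory, but a jump or branch instruction can load a different address into the PC. This relentless cycle is what allows your computer to perform complex tasks.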
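The whole cycle fits into a short loop. The sketch below is a toy accumulator machine and does not model any real CPU. The instruction names (`LOAD`, `ADD`, `STORE`, `JUMP`, `HALT`), the single accumulator `acc`, and the use of Python tuples in place of binary instruction words are all simplifying assumptions. It shows the PC being advanced after every fetch, and a `JUMP` overwriting the PC so that the next fetch comes from a different address.

```python
def run(memory: list, max_steps: int = 1000) -> int:
    """Run a toy accumulator machine (hypothetical) until HALT and return the accumulator."""
    pc = 0    # Program Counter: address of the next instruction
    acc = 0   # accumulator: holds the running result
    for _ in range(max_steps):
        # Fetch: copy the instruction at PC into the IR, then advance the PC
        ir = memory[pc]
        pc += 1
        # Decode: split the IR into an operation and an operand (an address)
        op, arg = ir
        # Execute
        if op == "LOAD":
            acc = memory[arg]
        elif op == "ADD":
            acc = acc + memory[arg]   # in a real CPU, this is the ALU's job
        elif op == "STORE":
            memory[arg] = acc
        elif op == "JUMP":
            pc = arg                  # overwrite the PC: the next fetch comes from arg
        elif op == "HALT":
            return acc
        else:
            raise ValueError(f"unknown opcode {op!r} at address {pc - 1}")
    raise RuntimeError("step limit reached without HALT")


memory = [
    ("LOAD", 6),    # 0: acc = memory[6]
    ("ADD", 7),     # 1: acc = acc + memory[7]
    ("STORE", 8),   # 2: memory[8] = acc
    ("JUMP", 5),    # 3: skip address 4
    ("ADD", 7),     # 4: never executed because of the jump
    ("HALT", 0),    # 5: stop
    2, 3, 0,        # 6, 7, 8: data
]
result = run(memory)
```

Because the jump skips address 4, `result` is 5 (2 + 3) rather than 8, and the same value is stored at address 8.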

The Role of the Instruction Queue in Modern CPUs

While the Instruction Register holds the single instruction being worked on, fetching each new instruction only after the previous one finishes would leave the processor waiting on memory much of the time. Modern processors avoid this with an instruction queue, which works like a waiting line for instructions.

Instead of fetching one instruction at a time, the processor can pre-fetch several upcoming instructions from memory and store them in this queue. This helps keep the ALU and other execution units busy instead of idling while the next instruction arrives. An effective instruction queue enhances processor efficiency because fetching upcoming instructions overlaps with executing the current one.
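The benefit of pre-fetching can be shown with a rough cycle count. This Python sketch compares a strict "fetch, then execute" design with one where a fetch unit fills a bounded queue (`collections.deque`) while the execution unit drains it. The model is deliberately simplified and the numbers are hypothetical. Fetching one instruction takes `fetch_cycles`, each instruction occupies the execution unit for at least one cycle, and there are no branches. The function names are invented for illustration.

```python
from collections import deque


def cycles_without_queue(exec_cycles: list[int], fetch_cycles: int) -> int:
    """Fetch, then execute, strictly one instruction after another."""
    return sum(fetch_cycles + e for e in exec_cycles)


def cycles_with_queue(exec_cycles: list[int], fetch_cycles: int, capacity: int) -> int:
    """A fetch unit fills a bounded queue while the execution unit drains it."""
    queue: deque = deque()      # indices of prefetched instructions, oldest first
    n = len(exec_cycles)
    next_fetch = 0              # index of the next instruction to prefetch
    fetch_left = fetch_cycles   # cycles left on the fetch in progress
    remaining = 0               # cycles left on the instruction being executed
    done = 0
    cycle = 0
    while done < n:
        cycle += 1
        # Execution unit: if idle, take the oldest instruction from the queue
        if remaining == 0 and queue:
            remaining = exec_cycles[queue.popleft()]
        if remaining > 0:
            remaining -= 1
            if remaining == 0:
                done += 1
        # Fetch unit: keep prefetching in parallel while the queue has room
        if next_fetch < n and len(queue) < capacity:
            fetch_left -= 1
            if fetch_left == 0:
                queue.append(next_fetch)
                next_fetch += 1
                fetch_left = fetch_cycles
    return cycle


# Four instructions that each take 3 cycles to execute; fetching takes 1 cycle
print(cycles_without_queue([3, 3, 3, 3], fetch_cycles=1))
print(cycles_with_queue([3, 3, 3, 3], fetch_cycles=1, capacity=4))
```

In this toy model the sequential design needs 16 cycles and the queued design needs 13. Only the very first fetch is exposed, because every later fetch happens while an earlier instruction is still executing.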

Pre-fetching is a cornerstone of pipeline architecture, a technique used in virtually all modern CPUs. Pipelining allows the processor to work on different stages of multiple instructions at the same time. For instance, while one instruction is being executed, the next one can be decoded, and the one after that can be fetched. The instruction queue feeds this pipeline, ensuring a constant flow of work and maximizing throughput.
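The overlap described above can be printed as a timing table. The following Python sketch assumes an ideal three-stage pipeline with one clock cycle per stage and no stalls, hazards or branches. Real pipelines are much deeper and do stall. `pipeline_schedule` is a hypothetical helper written for this example.

```python
STAGES = ("Fetch", "Decode", "Execute")


def pipeline_schedule(n_instructions: int, stages: tuple[str, ...] = STAGES) -> list[list[str]]:
    """Return one row per clock cycle showing each instruction's stage ("-" = not in the pipeline)."""
    k = len(stages)
    rows = []
    for cycle in range(n_instructions + k - 1):   # an ideal pipeline needs n + k - 1 cycles
        row = []
        for i in range(n_instructions):
            stage = cycle - i                      # instruction i enters the pipeline at cycle i
            row.append(stages[stage] if 0 <= stage < k else "-")
        rows.append(row)
    return rows


for cycle, row in enumerate(pipeline_schedule(4), start=1):
    print(f"cycle {cycle}: " + " | ".join(f"I{i} {s:<7}" for i, s in enumerate(row)))
```

Under these assumptions, four instructions finish in 4 + 3 - 1 = 6 cycles. Without overlap, each instruction would pass through all three stages before the next could start, which takes 4 × 3 = 12 cycles.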

Other Key Players: Registers and Cache Memory

The Instruction Register doesn’t work in isolation. It is part of a larger system of memory and storage components that work together to feed the processor. The two other critical players in this hierarchy are general-purpose registers and cache memory. Understanding their roles helps clarify how instructions move through the system.

Registers are the fastest type of memory available to the CPU. They are small storage locations built directly into the processor chip. Besides the IR, there are other registers for holding data, memory addresses, and results of calculations. Because they are so close to the ALU, access is nearly instantaneous.

Cache memory is a small amount of very fast memory that sits between the CPU and the main memory (RAM). It stores copies of frequently used data and instructions from RAM. When the CPU needs an instruction, it checks the cache first. Because cache is much faster than RAM, a cache hit significantly speeds up the fetching process and cuts the time the CPU spends waiting.
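Here is a small Python model of the "check the cache first" rule. It assumes a tiny fully associative cache with least-recently-used (LRU) eviction, built on `collections.OrderedDict`, and stores one entry per instruction address. Real CPU caches are hardware, work on multi-byte cache lines and are usually set-associative, so treat `InstructionCache` as a hypothetical illustration only.

```python
from collections import OrderedDict


class InstructionCache:
    """Toy fully associative cache with LRU eviction (illustration only)."""

    def __init__(self, capacity: int, memory: dict[int, str]):
        self.capacity = capacity
        self.memory = memory          # stands in for slower main memory (RAM)
        self.lines = OrderedDict()    # address -> instruction, least recently used first
        self.hits = 0
        self.misses = 0

    def fetch(self, address: int) -> str:
        if address in self.lines:             # check the cache first
            self.hits += 1
            self.lines.move_to_end(address)   # mark as most recently used
            return self.lines[address]
        self.misses += 1                      # miss: fall back to main memory
        instruction = self.memory[address]
        self.lines[address] = instruction
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)    # evict the least recently used entry
        return instruction


# A loop body of four instructions, executed ten times
memory = {addr: f"instr{addr}" for addr in range(4)}
cache = InstructionCache(capacity=4, memory=memory)
for _ in range(10):
    for addr in range(4):
        cache.fetch(addr)
print(cache.hits, cache.misses)
```

Only the first pass through the loop misses. The other 36 fetches are served from the cache, which is why loops benefit so much from instruction caching.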

| Storage Type | Speed | Size | Location |
|---|---|---|---|
| Registers | Fastest | Very Small (Bytes) | Inside the CPU |
| Cache Memory | Very Fast | Small (Kilobytes to Megabytes) | On or very close to the CPU |
| Main Memory (RAM) | Slower | Large (Gigabytes) | On the motherboard |

Why is Instruction Storage so Important for Performance?

The way a processor stores and manages instructions has a massive impact on its overall performance. The goal is always to keep the execution units, especially the ALU, as busy as possible. Any delay in getting the next instruction to the ALU results in wasted time and slower performance.

Efficient instruction storage mechanisms like caches and instruction queues are designed to reduce latency. Here, latency is the delay between the processor requesting an instruction and that instruction arriving. By pre-fetching instructions and storing them in high-speed caches or queues close to the CPU, the processor avoids the long wait times associated with retrieving them from slower main memory.
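A common way to quantify the benefit is the average memory access time: the cache's hit time plus the fraction of accesses that miss multiplied by the extra cost of each miss. The Python sketch below applies that formula. The numbers (1 ns hit time, 5% miss rate, 100 ns trip to RAM) are purely illustrative assumptions, not measurements of any real processor.

```python
def average_access_time(hit_time_ns: float, miss_rate: float, miss_penalty_ns: float) -> float:
    """Average memory access time = hit time + miss rate * miss penalty."""
    return hit_time_ns + miss_rate * miss_penalty_ns


# Illustrative numbers only
with_cache = average_access_time(hit_time_ns=1.0, miss_rate=0.05, miss_penalty_ns=100.0)
print(with_cache)   # 1 + 0.05 * 100 = 6 ns on average
```

With these assumed figures, an instruction fetch costs about 6 ns on average instead of 100 ns, even though the cache still misses occasionally.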

Keeping instructions close to the processor not only reduces delays but also enhances throughput, which is the number of instructions a processor can complete in a given period. A well-managed flow of instructions lets the CPU overlap work on several instructions at once, making it more productive. For you, this translates to a faster, more responsive computer that handles complex applications and multitasking with fewer slowdowns.

How Modern Processors Handle Instructions Differently

Processor architecture has evolved significantly over the years. Traditional designs had a simple, linear approach: fetch one instruction, decode it, execute it, and then repeat. This was effective but often left parts of the CPU idle while waiting for the next step.

Modern architectures are far more sophisticated. They use advanced techniques like out-of-order execution, where the processor can execute instructions in a different order than the program specifies, as long as it doesn’t change the final result. This allows the CPU to work on available instructions while waiting for data needed for an earlier one.
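To see why reordering helps, here is a simplified Python model. It assumes register renaming, so only true read-after-write dependencies matter, and it allows at most one instruction to start per cycle. The instructions and their latencies are invented. In-order mode may never start an instruction before an older one that is still waiting. Out-of-order mode starts the oldest instruction whose inputs are ready. Real hardware also uses structures such as a reorder buffer to commit results in program order, which this sketch leaves out.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Instr:
    text: str               # human-readable form (for reading only)
    dest: str               # register the instruction writes
    srcs: tuple[str, ...]   # registers it reads
    latency: int            # cycles until its result can be used (>= 1)


def schedule(program: list[Instr], in_order: bool) -> list[int]:
    """Return the cycle in which each instruction starts; at most one starts per cycle."""
    # For each source register, find the most recent earlier instruction that writes it
    deps = []
    for i, ins in enumerate(program):
        producers = []
        for reg in ins.srcs:
            writers = [j for j in range(i) if program[j].dest == reg]
            if writers:
                producers.append(writers[-1])
        deps.append(producers)

    start: list[Optional[int]] = [None] * len(program)
    cycle = 0
    while None in start:
        for i in range(len(program)):
            if start[i] is not None:
                continue
            ready = all(
                start[j] is not None and start[j] + program[j].latency <= cycle
                for j in deps[i]
            )
            if ready:
                start[i] = cycle
                break      # issue at most one instruction this cycle
            if in_order:
                break      # in-order: cannot skip past the oldest waiting instruction
        cycle += 1
    return [s for s in start if s is not None]


program = [
    Instr("LOAD r1, [addr]", "r1", (), 4),            # slow memory load (hypothetical latency)
    Instr("ADD  r2, r1, r1", "r2", ("r1",), 1),       # needs the load's result
    Instr("ADD  r3, r4, r5", "r3", ("r4", "r5"), 1),  # independent of the load
    Instr("SUB  r6, r5, r4", "r6", ("r5", "r4"), 1),  # independent of the load
]
for in_order in (True, False):
    starts = schedule(program, in_order)
    finish = max(s + ins.latency for s, ins in zip(starts, program))
    print("in-order" if in_order else "out-of-order", starts, "finishes at cycle", finish)
```

With these made-up latencies, the in-order run starts the instructions at cycles [0, 4, 5, 6] and finishes at cycle 7. The out-of-order run starts them at [0, 4, 1, 2] and finishes at cycle 5, because the two independent instructions use the cycles spent waiting for the load. Each instruction still reads the same inputs, so the final register values are unchanged.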

Furthermore, today’s CPUs are almost all multi-core, meaning they have multiple independent processing units on a single chip. Each core has its own registers and execution units, and usually its own smaller caches, while a larger last-level cache is often shared between cores. This allows for true parallel processing, where multiple instruction streams can be executed at the same time. These advancements, combined with deep instruction pipelines and intelligent pre-fetching algorithms, have led to the massive performance gains we see in computers today.

Frequently Asked Questions

What component of a processor holds instructions waiting to be processed by the ALU?

The primary component that holds the single, current instruction waiting for the ALU is the Instruction Register (IR). However, modern CPUs also use an instruction queue to hold multiple upcoming instructions, which helps keep the ALU busy.

How does the instruction register interact with the ALU?

The instruction register holds the instruction while the control unit decodes it. Based on the decoded instruction, the control unit directs the necessary data to the ALU, which then performs the required arithmetic or logical operation.

Can the instruction register hold multiple instructions at once?

No, the instruction register is specifically designed to hold only one instruction at a time. To handle multiple instructions, processors use techniques like instruction pipelining and instruction queues, which line up several instructions at different stages of processing.

What is the difference between a register and cache memory?

Registers are the fastest, smallest memory units located directly inside the CPU core, used for holding the data the CPU is actively working on. Cache is a slightly larger, fast memory that sits between the CPU and main memory (RAM) to store frequently accessed data and instructions, reducing retrieval time.

Why is the fetch-decode-execute cycle important?

This cycle is the fundamental operational process of a CPU. It is the sequence of steps the processor follows to retrieve an instruction from memory, understand what it means, and then carry it out, allowing your computer to run programs and perform tasks.
