When you analyze data, you need to understand how spread out the numbers are. Two common tools for this are the range and the standard deviation. While the range is simple to calculate, it often gives a misleading picture. This article explains why standard deviation is almost always a better choice for understanding the true variability in your dataset, helping you make more accurate and informed decisions.

What Is the Range in Statistics?

The range is the simplest way to measure the spread of data. You find it by taking the highest value in a dataset and subtracting the lowest value. It gives you a quick idea of how wide the data spreads from one end to the other.

For example, if the test scores in a class are 65, 70, 78, 85, and 95, the range is 95 – 65 = 30. This tells you the scores span a 30-point difference.

While easy to find, this simplicity is also its biggest weakness. The range only uses two data points and ignores everything in between, which can hide important details about how the data is actually distributed.

What Is the Standard Deviation?

Standard deviation is a more powerful measure of spread. It tells you the average distance of each data point from the dataset’s mean (or average). A small standard deviation means the data points are clustered closely around the mean, while a large one means they are spread far apart.

Calculating it is more complex than finding the range. It involves finding the average of the squared differences from the mean. However, this extra work pays off because it provides a much more accurate and detailed view of your data’s consistency.

Standard deviation gives you a comprehensive understanding of data variability because it considers every single value in the dataset. This makes it a cornerstone of many statistical analyses.

The Main Problem with Using Just the Range

The biggest limitation of the range is that it provides very limited information. It tells you the width of your data but nothing about the shape or distribution of the values within that width. Two datasets can have the same range but look completely different.

Consider these two sets of numbers:

- Set A: 1, 2, 3, 4, 10

- Set B: 1, 6, 6, 7, 10

Both datasets have a range of 9 (10 – 1 = 9). However, in Set A, most values are clustered at the low end with one outlier. In Set B, the values are more spread out in the middle. The range fails to capture this crucial difference, but the standard deviation would clearly show that Set B has less variability.

This oversight can lead to incorrect conclusions. You might think the data is widely dispersed when, in reality, most of it is very consistent, with just one or two extreme values skewing the range.

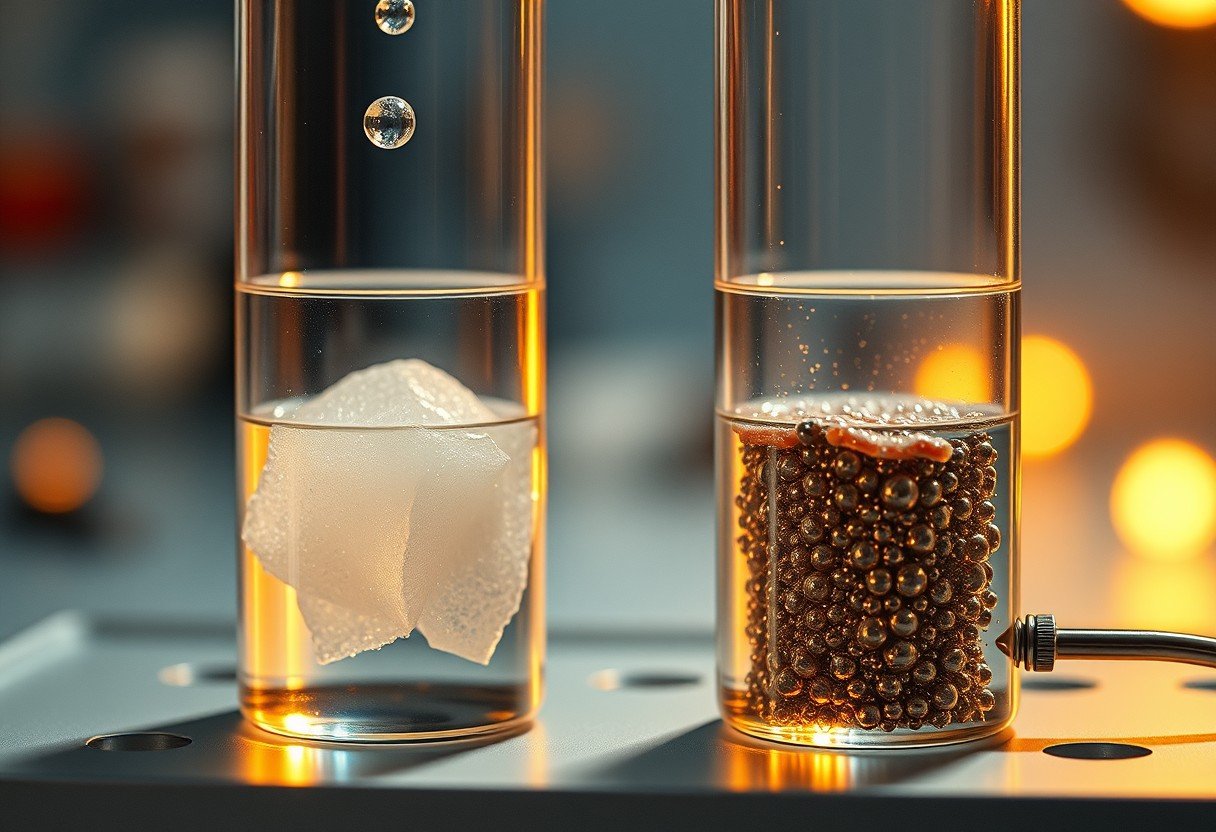

Why Outliers Distort the Range so Much

The range is extremely sensitive to outliers, which are values that are unusually high or low compared to the rest of the data. Since the range is calculated using only the maximum and minimum values, a single outlier can dramatically inflate it.

Imagine a dataset of employee salaries where most people earn between $50,000 and $70,000, but the CEO earns $1,000,000. The range would be huge and would not accurately represent the salary spread for the typical employee.

Standard deviation, on the other hand, is less affected by outliers. While an extreme value will influence the standard deviation, its impact is moderated because all other data points are included in the calculation. This makes standard deviation a more stable and reliable measure of dispersion.

How Standard Deviation Gives a Fuller Picture

Standard deviation provides a complete representation of data variability by accounting for every value. It shows how data points cluster around the average, offering insights into the consistency of the dataset. This comprehensive view is essential for accurate data interpretation and is why it is used in so many statistical methods, from hypothesis testing to regression analysis.

Here is a quick comparison:

| Feature | Range | Standard Deviation |

|---|---|---|

| Data Points Used | Only the maximum and minimum | All data points |

| Sensitivity to Outliers | Very high | Moderate |

| Information Provided | Only the total spread | Average deviation from the mean |

| Usefulness | Quick, simple estimate | In-depth statistical analysis |

This table clearly shows why standard deviation is the superior choice for most analytical purposes.

When Is It Okay to Use the Range?

Despite its limitations, the range can be useful in specific situations. It is acceptable when you need a quick, rough estimate of spread, especially in the early stages of data exploration.

You might use the range if you are working with a small, simple dataset that you know does not contain any extreme outliers. For example, in a quality control process, you might check if all products fall within a certain measurement range. In this case, the extremes are exactly what you care about.

However, for any serious analysis where understanding the true distribution and consistency of data is important, you should move beyond the range. Relying solely on the range in complex datasets can lead to flawed interpretations and poor decisions.

Frequently Asked Questions

Why is the range less informative than the standard deviation?

The range only uses the highest and lowest values, ignoring the distribution of all other data points. Standard deviation considers every data point and its distance from the average, providing a much more complete picture of variability.

How do outliers affect the range compared to the standard deviation?

The range is highly sensitive to outliers because a single extreme value will define one of its boundaries. Standard deviation is less affected because it averages the deviations of all data points, which moderates the impact of a single outlier.

Does the range provide information about the data’s shape?

No, the range tells you nothing about whether the data is clumped together, spread out evenly, or skewed to one side. It only provides the total width of the data spread.

In what situations is the range still useful?

The range is useful for getting a quick, simple estimate of spread in small datasets without outliers. It is also helpful in quality control or when you are specifically interested in the extreme values, like a temperature range.

What does a high standard deviation tell you?

A high standard deviation indicates that the data points are spread out over a wider range of values and are far from the mean. This suggests greater variability or less consistency within the dataset.

Should I ever report both the range and the standard deviation?

Yes, reporting both can sometimes provide a more complete story. The range gives the full extent of the data, while the standard deviation describes the typical spread. Together, they can offer complementary insights into your data’s distribution.

Leave a Comment